The history of computing

October 12th, 2025

- The mechanical era (3000 BCE - 1930s)

- The electronic revolution (1930s - 1970s)

- The microprocessor and personal computing era (1970s - 1990s)

- The internet age and mobile revolution (2000s - 2010s)

- Where we are now (2020s)

- The future of computing

The word "computing" comes from the Latin "computare", to calculate or count. For most of history, computers were people, not machines. Computing didn't begin with silicon chips or the internet. It began thousands of years ago with humans trying to solve a fundamental problem: how to count, calculate, and process information faster than the human mind alone could manage.

- Computing: from Latin "computare", meaning "to calculate, count, reckon". Formed from "com-" (together, with) + "putare" (to reckon, consider, think). Originally meant the act of calculation using any method. In the 17th century, "computer" referred to a person who performed calculations, often employed to create mathematical tables. The term shifted to machines in the mid-20th century. Today, computing encompasses not just arithmetic but information processing, data manipulation, and algorithmic problem-solving across all domains.

From mechanical gears to quantum bits, the evolution of computing represents humanity's relentless drive to extend the capabilities of human thought. This is that story.

The mechanical era (3000 BCE - 1930s):

The earliest computing devices were mechanical. The abacus, developed around 3000 BCE in Mesopotamia, allowed merchants and accountants to perform arithmetic operations by sliding beads along rods. For millennia, this remained the dominant calculation tool across civilisations from China to Rome.

- Mesopotamia: ancient region in modern-day Iraq and parts of Syria, Turkey, and Iran. Often called "the cradle of civilisation", it was home to the Sumerians, Babylonians, and Assyrians. The name comes from Greek meaning "between rivers" (the Tigris and Euphrates).

- Civilisations: complex societies with organised government, agriculture, trade, and written language. Examples include Ancient Egypt (pyramids, hieroglyphics), Rome (roads, aqueducts, law), China (Great Wall, papermaking), Greece (democracy, philosophy), and the Maya (astronomy, mathematics).

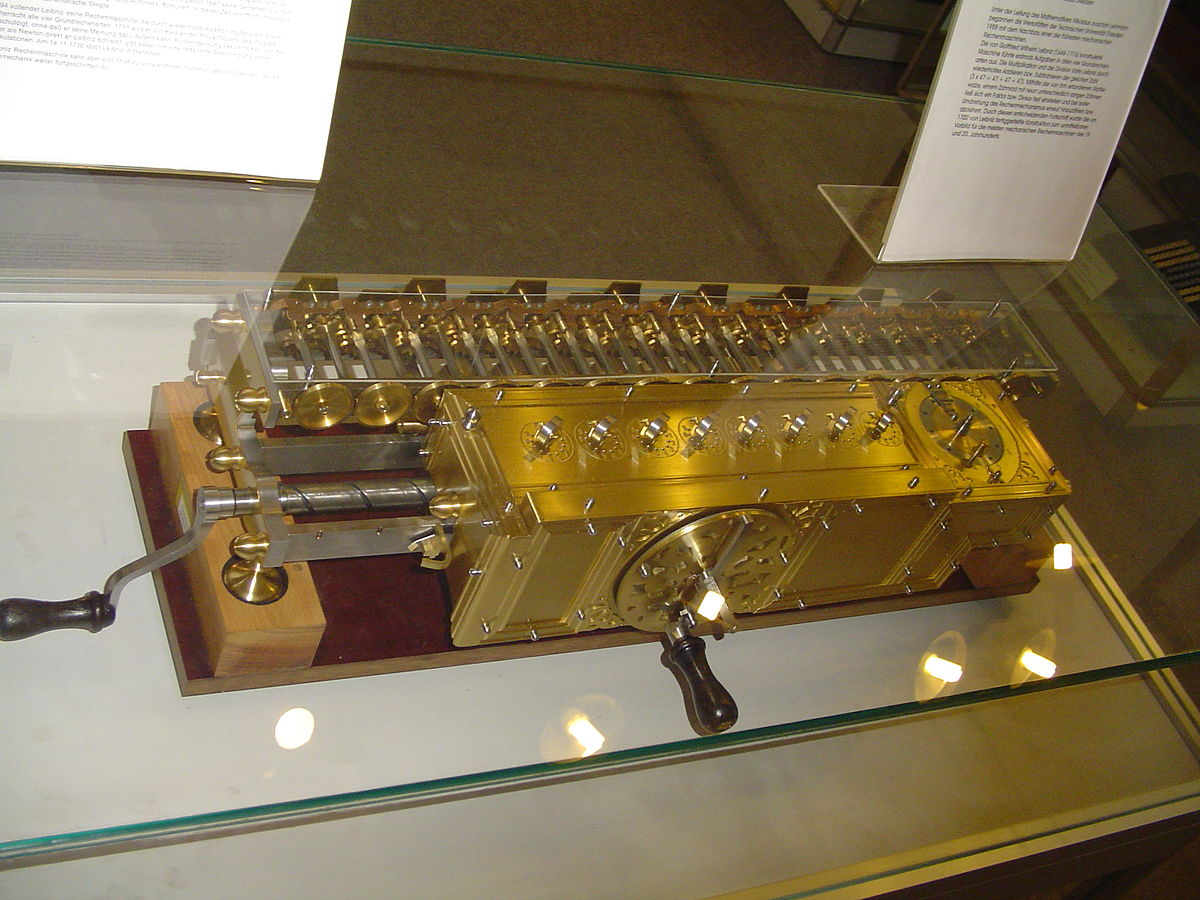

In 1642, Blaise Pascal invented the Pascaline, the first mechanical calculator capable of addition and subtraction through a series of interlocking gears. Thirty years later, Gottfried Wilhelm Leibniz improved upon this design with the Stepped Reckoner, which could also multiply and divide. These machines were marvels of precision engineering, but they were single-purpose devices, incapable of being programmed for different tasks.

- Blaise Pascal: French mathematician, physicist, and philosopher (1623-1662). Child prodigy who made major contributions to geometry, probability theory, and fluid mechanics. Also known for his religious philosophy, particularly "Pascal's Wager".

- Pascaline: the first mechanical calculator, invented when Pascal was just 19 to help his father with tax calculations. It used a series of numbered wheels connected by gears.

- Interlocking gears: toothed wheels that mesh together. When one gear turns, its teeth push against the teeth of the next gear, causing it to rotate. By connecting gears of different sizes, you can multiply or reduce motion. In the Pascaline, turning a gear representing units would automatically turn the tens gear when reaching 10, just like carrying numbers in arithmetic.

- Gottfried Wilhelm Leibniz: German mathematician and philosopher (1646-1716). Co-inventor of calculus (with Newton), developed the binary number system, and made contributions to logic, physics, and philosophy. Known for the claim that ours is "the best of all possible worlds".

- Stepped Reckoner: Leibniz's mechanical calculator (1673) that could add, subtract, multiply, and divide. It used a special cylinder with teeth of increasing lengths (the "Leibniz wheel") to perform multiplication through repeated addition.
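The carrying behaviour described for the Pascaline's gears can be sketched in a few lines of Python. This is only an illustration of the idea, not a model of the real mechanism: the function names and the list-of-digits representation are assumptions made for the example.

```python
def add_on_wheels(wheels, amount, position=0):
    """Advance the wheel at `position` by `amount` steps, carrying as needed.

    `wheels` holds one decimal digit per wheel, least significant first,
    so wheels[0] is the units wheel and wheels[1] is the tens wheel.
    (Hypothetical representation chosen for this sketch.)
    """
    wheels = list(wheels)  # work on a copy so the caller's list is untouched
    for _ in range(amount):
        i = position
        # Turn one wheel by one step. A wheel that passes 9 rolls over to 0
        # and pushes its neighbour forward, like a carry in written arithmetic.
        while i < len(wheels):
            wheels[i] = (wheels[i] + 1) % 10
            if wheels[i] != 0:
                break
            i += 1
    return wheels


def read_wheels(wheels):
    """Read the number currently shown on the wheels."""
    return sum(digit * 10 ** i for i, digit in enumerate(wheels))


# 98 + 5: the units wheel rolls over and the carry ripples into the hundreds.
result = add_on_wheels([8, 9, 0, 0], 5)
print(read_wheels(result))
```

With a fixed number of wheels the count wraps around when every wheel rolls over, which is the same limitation any fixed-width register has.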

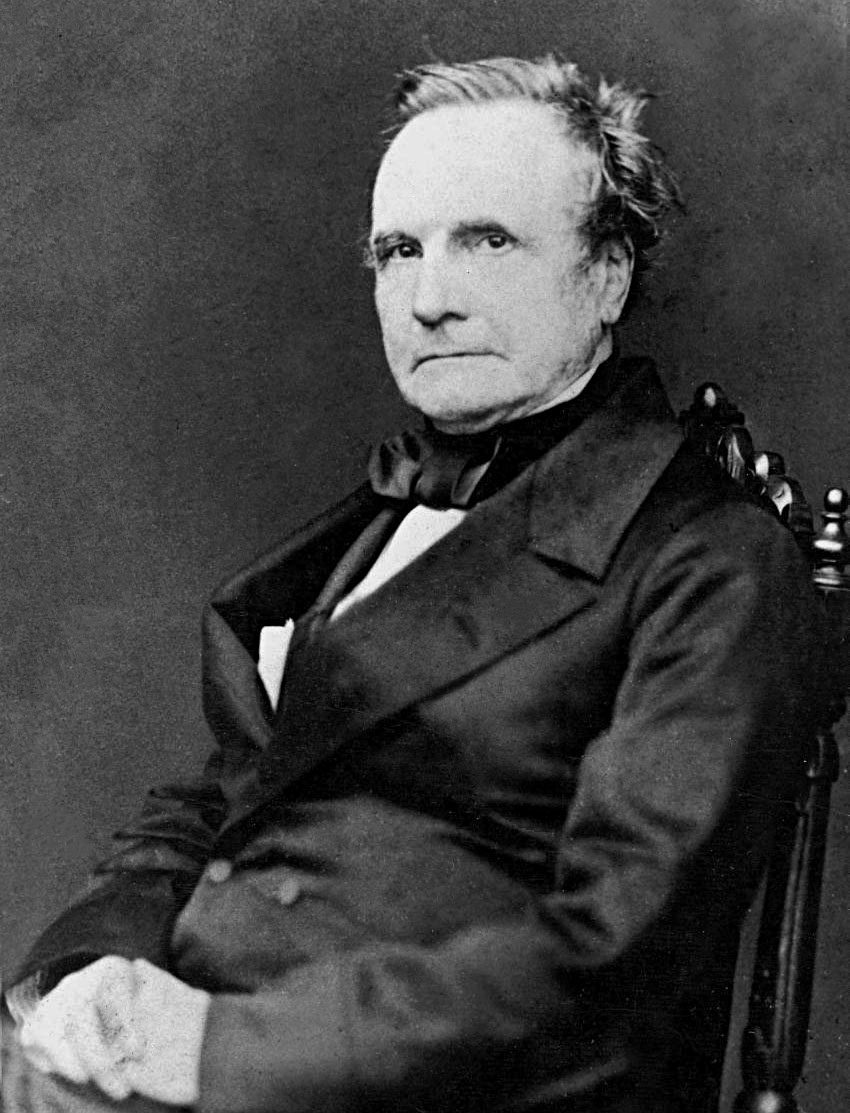

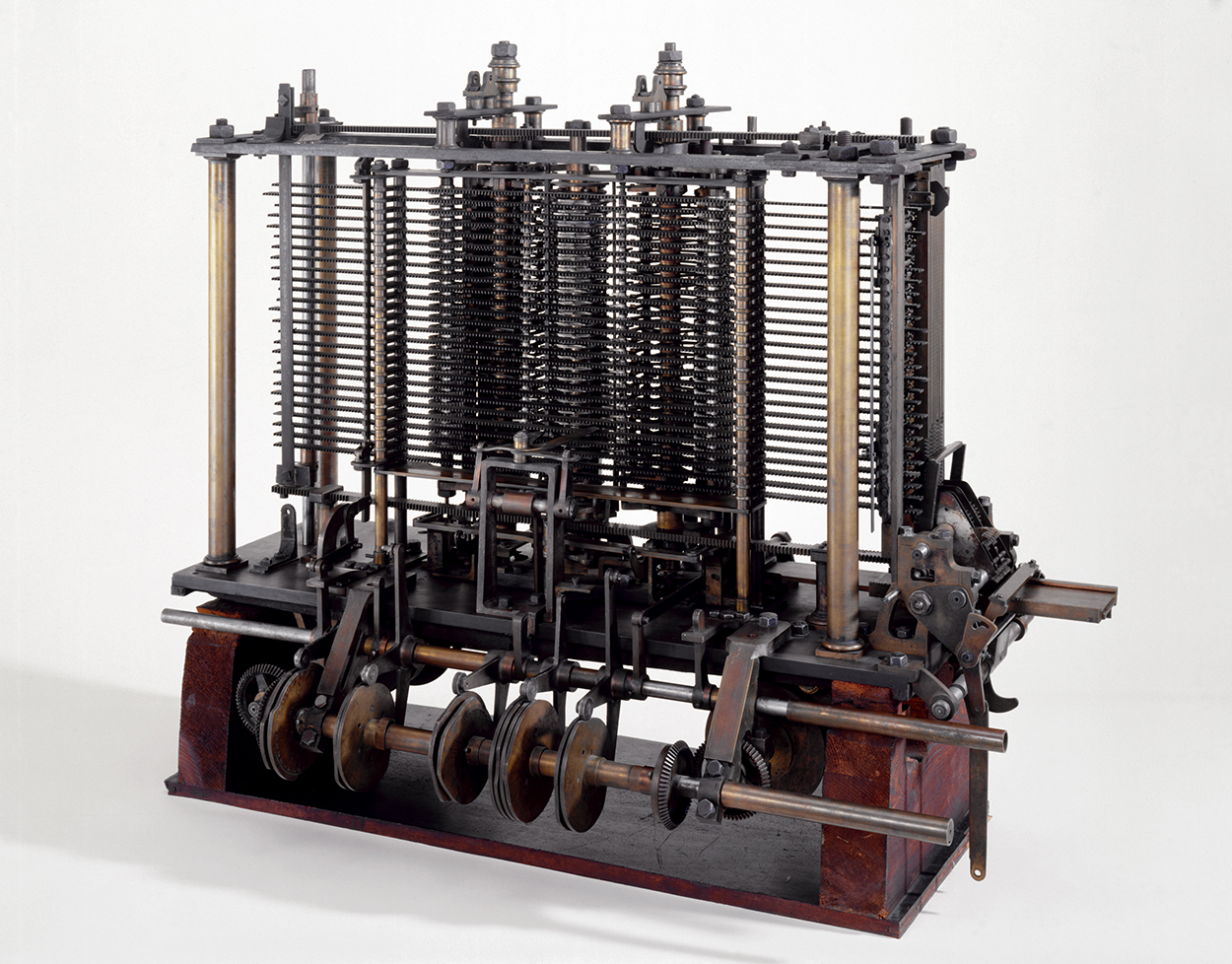

The true conceptual breakthrough came in 1837 when Charles Babbage designed the Analytical Engine, a mechanical computer that could be programmed using punched cards. Though never completed in his lifetime due to manufacturing limitations, Babbage's design contained all the essential elements of a modern computer: memory (the "store"), processing unit (the "mill"), input/output mechanisms, and conditional branching. Ada Lovelace, working with Babbage, wrote what is now recognised as the first computer algorithm, envisioning that such machines could go beyond pure calculation to manipulate symbols and create music or art.

- Charles Babbage: English mathematician and inventor (1791-1871), often called "father of the computer". Frustrated by errors in mathematical tables, he designed mechanical computers that were a century ahead of their time. Though his machines were never completed due to manufacturing limitations and funding issues, his designs contained all the principles of modern computing.

- Analytical Engine: Babbage's design for a fully programmable mechanical computer (1837). It would have been steam-powered, the size of a room, and capable of any calculation that could be described algorithmically. It featured punched card input (borrowed from Jacquard looms), a "mill" for calculations, a "store" for memory, and conditional branching. Though never built, it contained every logical element of a modern computer, conceived 100 years before electronic computers existed.

- Ada Lovelace: Ada King, Countess of Lovelace (1815-1852), daughter of poet Lord Byron. Mathematician and writer who worked with Babbage on the Analytical Engine. She wrote the first algorithm intended for machine processing (computing Bernoulli numbers) and envisioned that computers could go beyond pure calculation to compose music, produce graphics, and manipulate symbols. She's considered the world's first computer programmer.

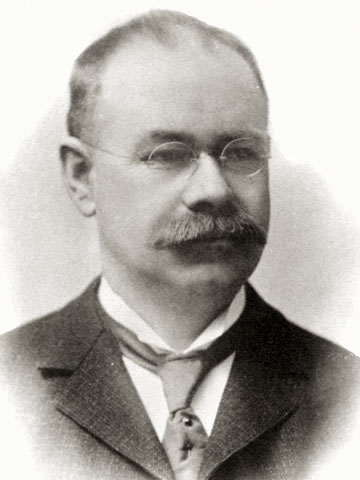

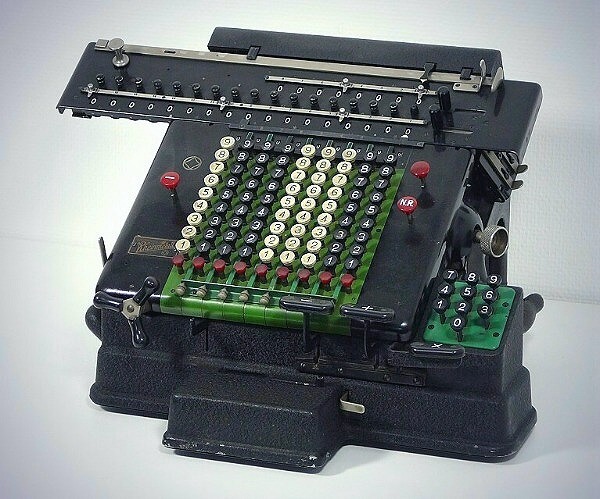

Herman Hollerith brought punched card computing to practical fruition with his tabulating machine, used in the 1890 United States Census. His company would eventually become IBM. By the early 20th century, mechanical and electromechanical calculators were common in offices, but they remained large, expensive, and limited in capability.

- Herman Hollerith: American inventor and statistician (1860-1929) who revolutionised data processing. Frustrated by the slow manual census counting process, he invented an electromechanical tabulation system using punched cards. His company, the Tabulating Machine Company, merged with others in 1911 to form the Computing-Tabulating-Recording Company, which was renamed IBM in 1924. His inventions laid the groundwork for the data processing industry.

- Punched card computing: a method of storing and processing data where holes punched in specific positions on cards represent information. Each card represents one record (like a person in a census). Machines could "read" the cards by detecting where holes were punched, sort cards automatically, and tabulate results. This system dominated data processing from the 1890s through the 1970s.

- Tabulating machine: Hollerith's electromechanical device (1890) that could automatically read, sort, and count data from punched cards. It used electrical contacts: when pins passed through holes in cards, they completed circuits that advanced mechanical counters. This reduced the 1890 US Census processing time from 8 years to just 1 year, processing data for 62 million people.

- IBM: International Business Machines Corporation. It grew out of a 1911 merger that included Hollerith's Tabulating Machine Company and took the IBM name in 1924. Started by making punch card tabulators and scales, IBM became one of the most influential technology companies, dominating mainframe computers in the mid-20th century and remaining a major force in enterprise computing, AI, and quantum computing today.

- Electromechanical calculators: calculating machines that combine mechanical parts (gears, levers) with electrical components (motors, relays, solenoids). Unlike purely mechanical calculators operated by hand cranks, these used electric motors for power and electromagnetic relays as switches. They were faster and could handle more complex calculations, but still used physical moving parts rather than pure electronics.
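The way a tabulating machine counts punched cards, one record per card with a counter advancing whenever a pin finds a hole, can be sketched roughly as follows. The card layout, the category names, and the `tabulate` function are all hypothetical, chosen only to illustrate the principle; they do not reproduce the actual 1890 census card format.

```python
from collections import Counter


def tabulate(cards, positions):
    """Count how many cards have a hole at each named position.

    `cards` is a list of sets of (column, row) hole positions, one set per card.
    `positions` maps a category name to the hole position that encodes it.
    """
    counters = Counter()
    for card in cards:
        for name, position in positions.items():
            if position in card:       # a pin passes through the hole, closing a circuit
                counters[name] += 1    # the matching counter advances by one
    return counters


# Hypothetical layout: column 3 row 1 means "farmer", column 7 row 0 means "under 20".
positions = {"farmer": (3, 1), "under_20": (7, 0)}
cards = [
    {(3, 1), (7, 0)},  # a farmer under 20
    {(3, 1)},          # a farmer aged 20 or over
    {(7, 0)},          # someone under 20 who is not a farmer
]
print(tabulate(cards, positions))
```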

The electronic revolution (1930s - 1970s):

The shift from mechanical to electronic computing was driven by World War II's demand for rapid calculation. Breaking enemy codes, calculating artillery trajectories, and designing atomic weapons required computational power far beyond what mechanical devices could provide.

- Breaking enemy codes: cryptanalysis, the science of decrypting secret messages without the key. During WWII, both sides used complex encryption machines. The most famous were Germany's Enigma (used by the military) and Lorenz (used by High Command). These machines scrambled messages using rotating wheels and electrical circuits, creating billions of possible configurations. Codebreakers were mathematicians, linguists, and chess players who looked for patterns, exploited mechanical flaws, and used early computers to test possibilities. Breaking these codes gave the Allies advance knowledge of German plans, ship movements, and strategies. Historians estimate codebreaking shortened the war by 2 to 4 years, saving millions of lives.

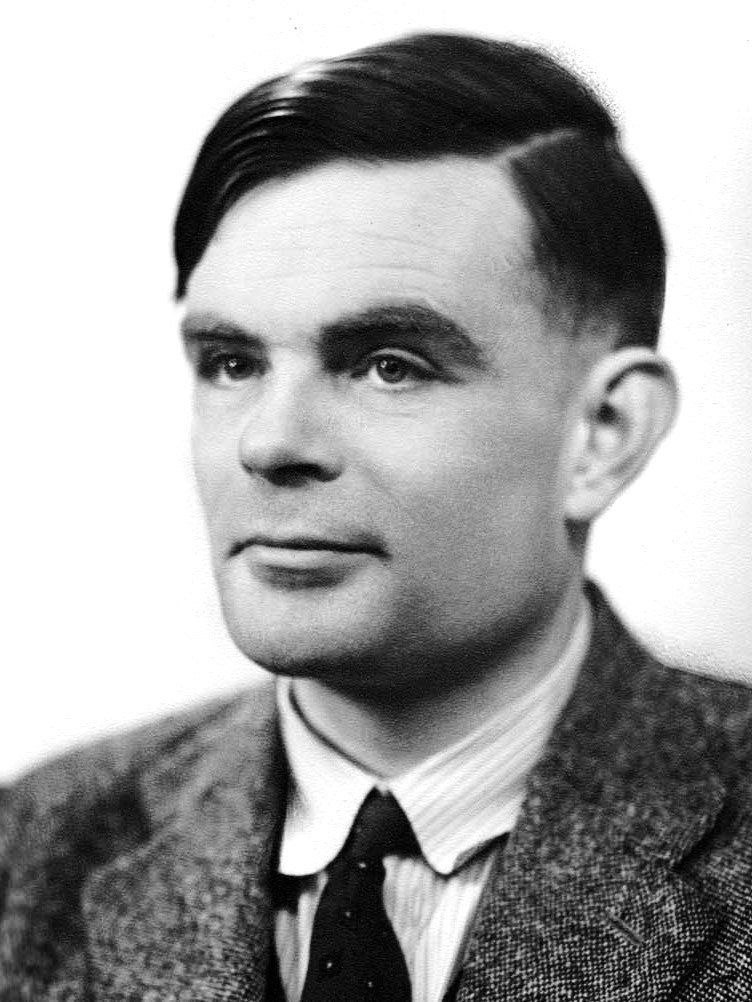

In 1936, Alan Turing published his seminal paper describing the Turing Machine, a theoretical device that could simulate any algorithmic process. Though purely conceptual, it established the fundamental limits of computation and proved that certain problems are inherently unsolvable by any algorithm. This work laid the theoretical foundation for all modern computing.

- Alan Turing: British mathematician, logician, and cryptanalyst (1912-1954). Considered the father of theoretical computer science and artificial intelligence. During WWII, he led the team that cracked the German Enigma code, potentially shortening the war by years. Tragically persecuted for being gay, he died at 41. His work on computability, the Turing Test, and code-breaking fundamentally shaped modern computing.

- Seminal paper: "On Computable Numbers, with an Application to the Entscheidungsproblem" (1936). Seminal means groundbreaking or highly influential work that shapes an entire field. This paper introduced the concept of a universal computing machine and proved fundamental limits of computation. It's one of the most important papers in computer science history, establishing the theoretical foundations before practical electronic computers existed.

- Turing Machine: a theoretical mathematical model, not a physical device. Imagine an infinitely long tape divided into cells, a read/write head that can move left or right, and a set of rules. The head reads a symbol, writes a new symbol, moves, and changes "state" according to the rules. Despite being extremely simple, it can simulate any algorithm that any computer can perform. It's a thought experiment that defined what "computation" fundamentally means.

- Inherently unsolvable: Turing proved some problems cannot be solved by any algorithm, no matter how powerful the computer. Example: the Halting Problem asks "can we write a program that determines if any given program will eventually stop or run forever?" Turing proved this is impossible. Why? Suppose such a program H exists. Create a program P that does the opposite of what H predicts about P itself. If H says P halts, P loops forever. If H says P loops, P halts. This contradiction proves H cannot exist. Other unsolvable problems: determining if two programs are equivalent, whether a program will ever reach a certain line of code, or whether a mathematical statement is provable. These are shown to be unsolvable by "reductions": if any of them could be solved, the Halting Problem could be solved too.
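The tape, head, and rule table of a Turing Machine are simple enough to simulate directly. The sketch below is a minimal illustration, not Turing's own formalism: the rule format, the state names, and the step limit are assumptions made for the example, and the sample rule table (binary increment) is just one small machine chosen to show the idea.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Run a one-tape machine until it enters the state "halt".

    `rules` maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is -1 for left and +1 for right.
    """
    # The tape is unbounded in both directions; unvisited cells read as blank.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return ""
    low, high = min(cells), max(cells)
    return "".join(cells[i] for i in range(low, high + 1)).strip(blank)


# Rule table for adding 1 to a binary number: walk to the right-hand end,
# then move left turning 1s into 0s until a 0 (or a blank) can become 1.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", -1, "halt"),
    ("carry", "_"): ("1", -1, "halt"),
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # 11 + 1 in binary
```

The step limit is a practical guard only. As the Halting Problem shows, no general check can decide in advance whether an arbitrary rule table will ever stop.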

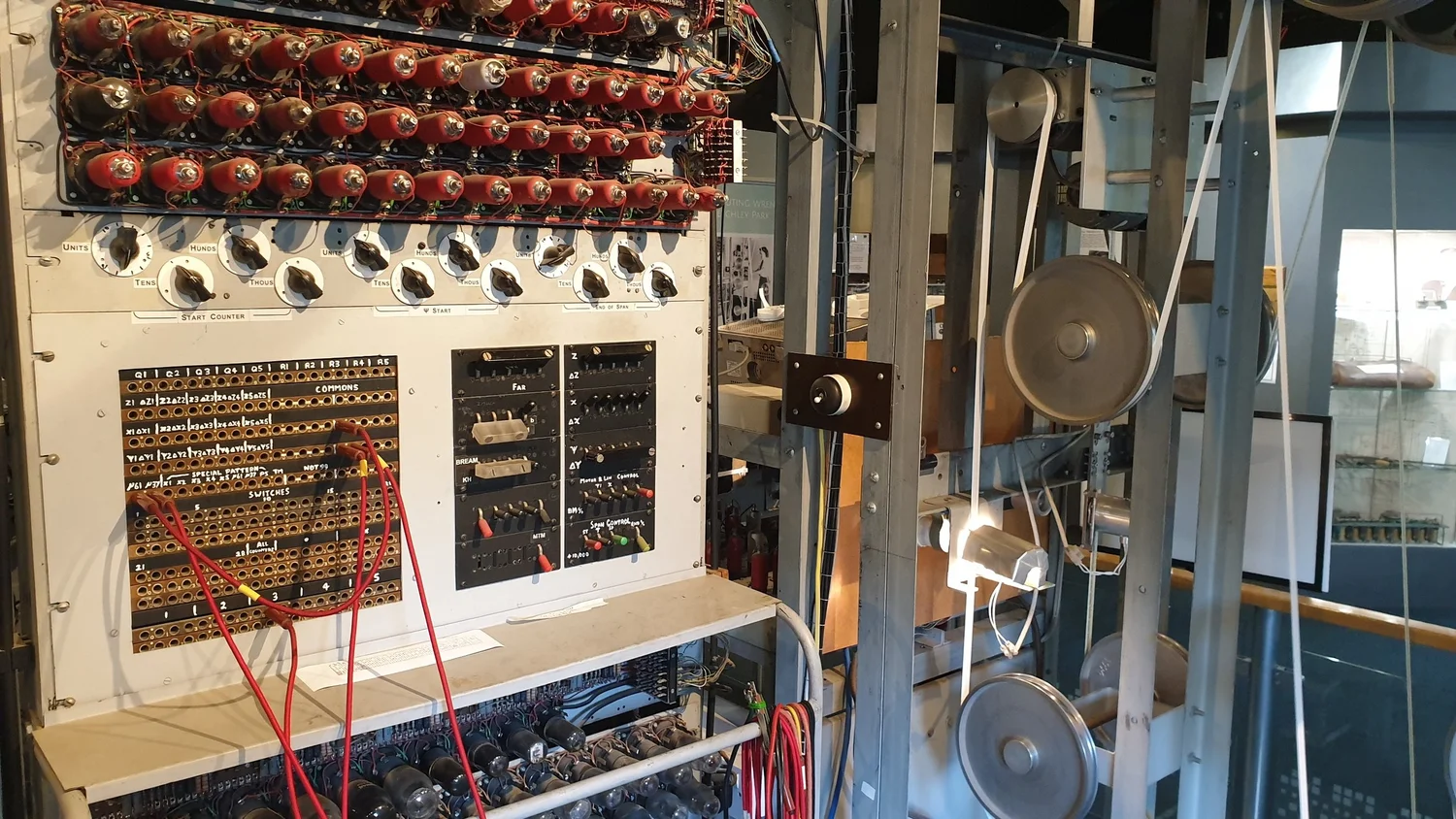

The first programmable electronicUsing the flow of electrons (electricity) rather than mechanical parts. At its core, electricity is the movement of electrons (negatively charged particles) through conductive materials. In electronic devices, we control this flow to represent information: current flowing = 1 (on), no current = 0 (off). Electronic components like vacuum tubes or transistors can switch these states millions of times per second with no moving parts, making them far faster than mechanical switches. This is why electronic computers revolutionised computing, they could perform operations at speeds impossible with gears and levers. computer was ColossusThe world's first programmable electronic computer (1943), built at Bletchley Park to crack German Lorenz cipher messages. It used 2,400 vacuum tubes to perform logical operations at 5,000 characters per second. How was it made? Engineer Tommy Flowers convinced his superiors (initially sceptical of using so many unreliable vacuum tubes) to let him build it. His team worked in secrecy for 10 months, combining telephone exchange technology, vacuum tube electronics, and paper tape readers. The machine was programmed by plugboards and switches, no stored programs yet. Its existence was kept secret until the 1970s. Ten Colossi were eventually built, directly contributing to Allied victory by decrypting German military communications.  , built in 1943 by British codebreakersIntelligence specialists who decrypt enemy communications. During WWII, Britain recruited mathematicians, linguists, crossword enthusiasts, and chess champions to Bletchley Park. These included Alan Turing, Gordon Welchman, Bill Tutte, and thousands of others (many were women). They worked in total secrecy, combining mathematical analysis, pattern recognition, linguistic intuition, and emerging computing technology. Their work wasn't just academic, each decrypted message provided actionable military intelligence. 
Many codebreakers couldn't tell even their families what they did until the 1970s when the work was declassified. at Bletchley ParkThe top-secret British codebreaking centre during WWII, located in Buckinghamshire, England. At its peak, over 10,000 people worked there, breaking German and Japanese codes. The site included mathematicians, engineers, linguists, and support staff, all sworn to secrecy. It housed multiple codebreaking operations, including Enigma (military codes) and Lorenz (High Command messages). The estate consisted of the main mansion plus temporary huts where teams worked around the clock. Winston Churchill called the Bletchley staff "the geese that laid the golden eggs and never cackled." Today, it's a museum.

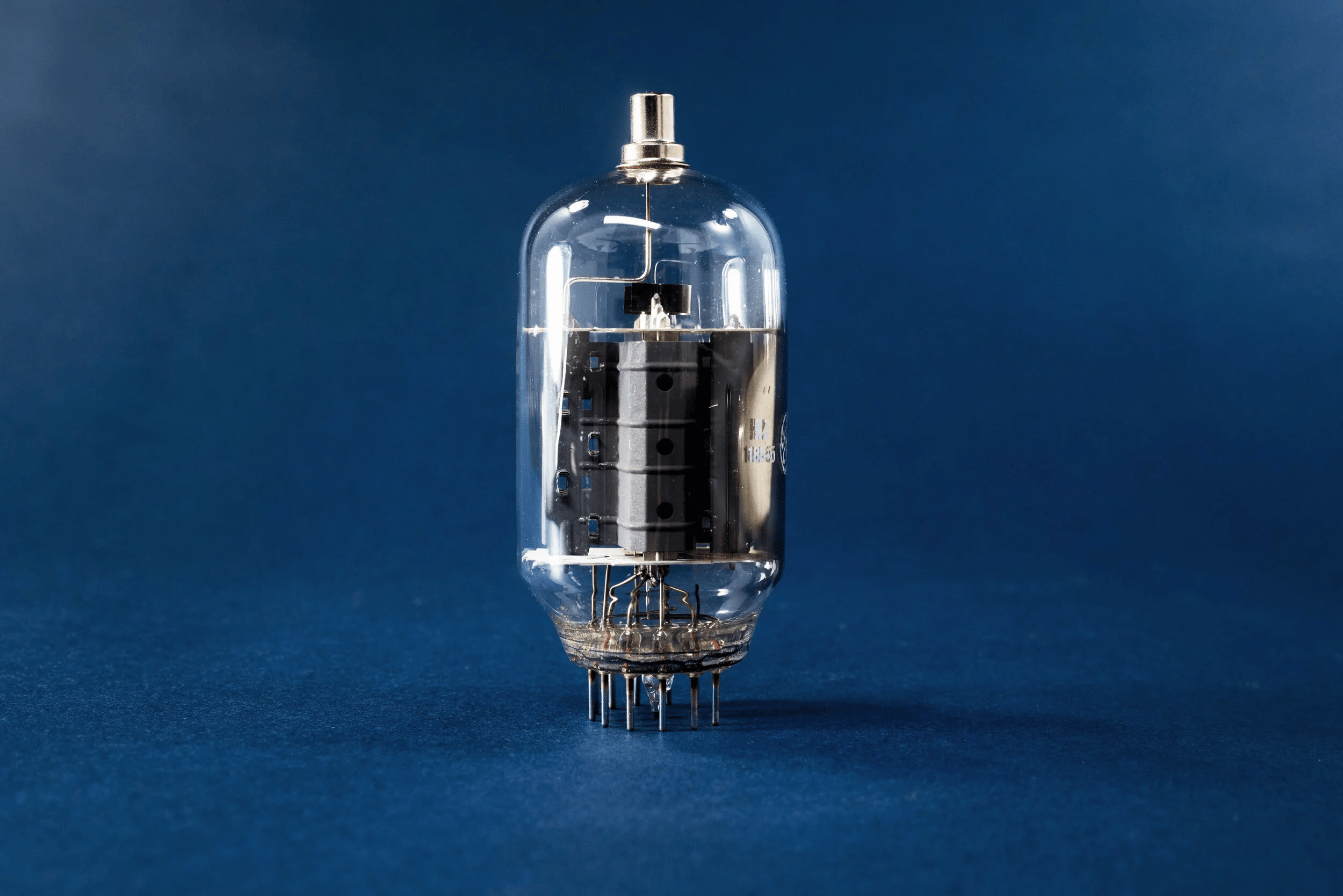

, built in 1943 by British codebreakersIntelligence specialists who decrypt enemy communications. During WWII, Britain recruited mathematicians, linguists, crossword enthusiasts, and chess champions to Bletchley Park. These included Alan Turing, Gordon Welchman, Bill Tutte, and thousands of others (many were women). They worked in total secrecy, combining mathematical analysis, pattern recognition, linguistic intuition, and emerging computing technology. Their work wasn't just academic, each decrypted message provided actionable military intelligence. Many codebreakers couldn't tell even their families what they did until the 1970s when the work was declassified. at Bletchley ParkThe top-secret British codebreaking centre during WWII, located in Buckinghamshire, England. At its peak, over 10,000 people worked there, breaking German and Japanese codes. The site included mathematicians, engineers, linguists, and support staff, all sworn to secrecy. It housed multiple codebreaking operations, including Enigma (military codes) and Lorenz (High Command messages). The estate consisted of the main mansion plus temporary huts where teams worked around the clock. Winston Churchill called the Bletchley staff "the geese that laid the golden eggs and never cackled." Today, it's a museum.  to crack German Lorenz cipherAn advanced German encryption system used by Hitler and the High Command for the most secret communications, more complex than Enigma. The Lorenz machine used 12 rotating wheels to scramble teleprinter messages, creating trillions of possible settings. Unlike Enigma (broken partly through captured machines and operator errors), Lorenz was reverse-engineered entirely from intercepted messages by mathematician Bill Tutte in 1942. He deduced the machine's internal workings without ever seeing one. Cracking Lorenz required Colossus, the first programmable electronic computer. 
These decrypted messages gave the Allies insight into Hitler's strategic thinking, including advance warning of D-Day defences. messages. It used vacuum tubesGlass tubes containing electrodes in a vacuum (no air). When heated, a cathode emits electrons that flow to an anode, creating current. By adding a third electrode (grid), you can control the flow, turning it on or off extremely quickly. This acts as an electronic switch or amplifier. Vacuum tubes revolutionised electronics, enabling radios, televisions, radar, and early computers. They were fast (switching in microseconds) but generated enormous heat, were fragile (like lightbulbs), consumed lots of power, and failed frequently. A computer with thousands of tubes needed constant maintenance. Transistors eventually replaced them, being smaller, cooler, more reliable, and using far less power.

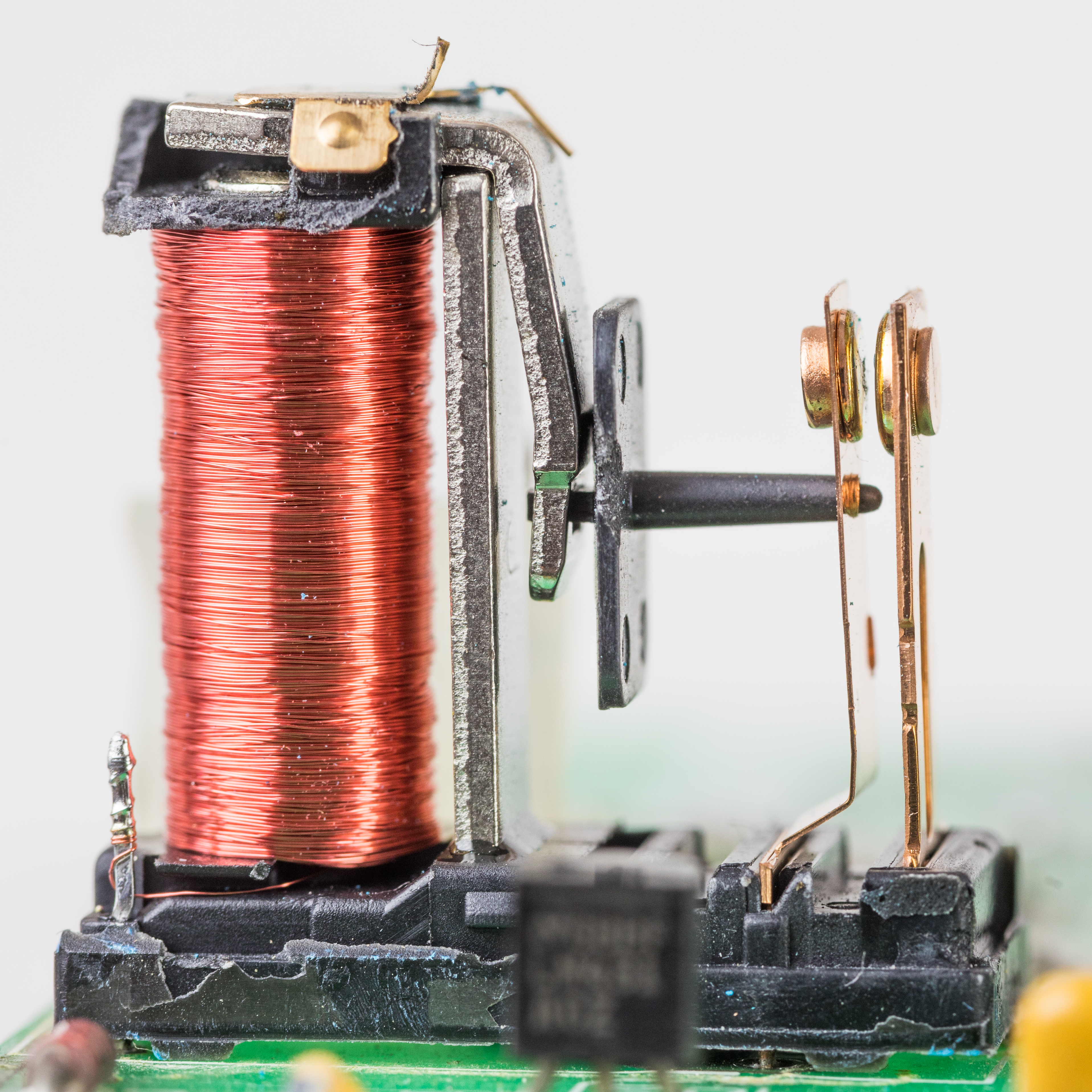

to crack German Lorenz cipherAn advanced German encryption system used by Hitler and the High Command for the most secret communications, more complex than Enigma. The Lorenz machine used 12 rotating wheels to scramble teleprinter messages, creating trillions of possible settings. Unlike Enigma (broken partly through captured machines and operator errors), Lorenz was reverse-engineered entirely from intercepted messages by mathematician Bill Tutte in 1942. He deduced the machine's internal workings without ever seeing one. Cracking Lorenz required Colossus, the first programmable electronic computer. These decrypted messages gave the Allies insight into Hitler's strategic thinking, including advance warning of D-Day defences. messages. It used vacuum tubesGlass tubes containing electrodes in a vacuum (no air). When heated, a cathode emits electrons that flow to an anode, creating current. By adding a third electrode (grid), you can control the flow, turning it on or off extremely quickly. This acts as an electronic switch or amplifier. Vacuum tubes revolutionised electronics, enabling radios, televisions, radar, and early computers. They were fast (switching in microseconds) but generated enormous heat, were fragile (like lightbulbs), consumed lots of power, and failed frequently. A computer with thousands of tubes needed constant maintenance. Transistors eventually replaced them, being smaller, cooler, more reliable, and using far less power.  instead of mechanical relaysElectromagnetic switches used in early computing and telecommunications. A relay contains a coil of wire and a movable metal contact. When electric current flows through the coil, it creates a magnetic field that pulls the contact, closing or opening a circuit. Think of it as an electrically controlled switch. Relays could represent binary states (on/off, 0/1) and perform logical operations by connecting them in circuits. 
However, they were slow (switching in milliseconds), noisy (you could hear rooms full of them clicking), wore out from physical movement, and were much larger than vacuum tubes. Early computers like Harvard Mark I used thousands of relays, creating massive, slow machines.

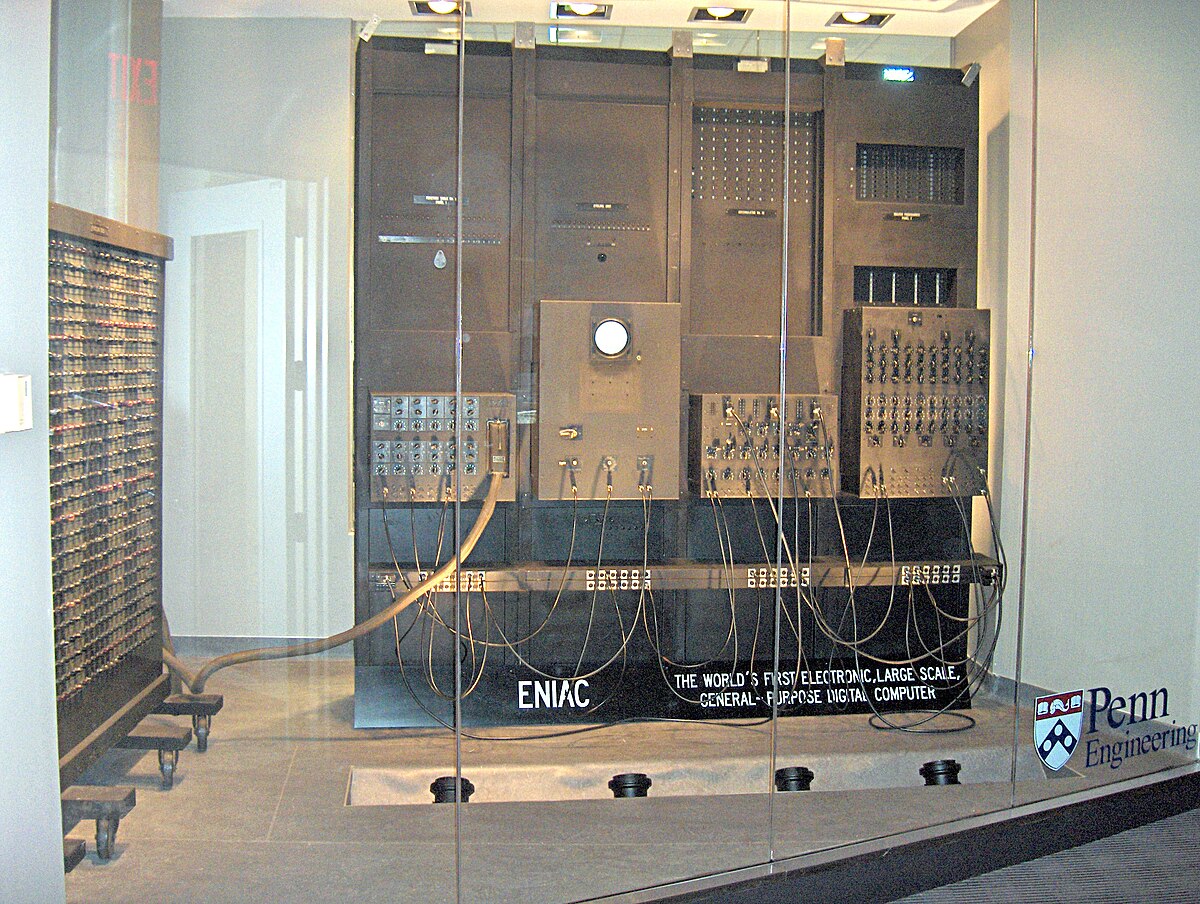

instead of mechanical relaysElectromagnetic switches used in early computing and telecommunications. A relay contains a coil of wire and a movable metal contact. When electric current flows through the coil, it creates a magnetic field that pulls the contact, closing or opening a circuit. Think of it as an electrically controlled switch. Relays could represent binary states (on/off, 0/1) and perform logical operations by connecting them in circuits. However, they were slow (switching in milliseconds), noisy (you could hear rooms full of them clicking), wore out from physical movement, and were much larger than vacuum tubes. Early computers like Harvard Mark I used thousands of relays, creating massive, slow machines.  , allowing it to process information at unprecedented speeds. Around the same time, the ENIACElectronic Numerical Integrator and Computer (1945), the first general-purpose electronic computer in the United States. Built at the University of Pennsylvania by John Mauchly and J. Presper Eckert to calculate artillery firing tables. ENIAC weighed 30 tonnes, filled a 167 square metre room, contained 17,468 vacuum tubes, 7,200 crystal diodes, 1,500 relays, 70,000 resistors, and 10,000 capacitors. It consumed 150 kilowatts of electricity (enough to power 150 homes) and generated so much heat that tubes failed constantly. Yet it could perform 5,000 additions per second, 1,000 times faster than any previous machine. Programming required physically rewiring plug boards and switches, taking days or weeks.

, allowing it to process information at unprecedented speeds. Around the same time, the ENIACElectronic Numerical Integrator and Computer (1945), the first general-purpose electronic computer in the United States. Built at the University of Pennsylvania by John Mauchly and J. Presper Eckert to calculate artillery firing tables. ENIAC weighed 30 tonnes, filled a 167 square metre room, contained 17,468 vacuum tubes, 7,200 crystal diodes, 1,500 relays, 70,000 resistors, and 10,000 capacitors. It consumed 150 kilowatts of electricity (enough to power 150 homes) and generated so much heat that tubes failed constantly. Yet it could perform 5,000 additions per second, 1,000 times faster than any previous machine. Programming required physically rewiring plug boards and switches, taking days or weeks.  (Electronic Numerical Integrator and Computer) was developed in the United States, completing in 1945. Weighing 30 tonnes and containing 17,468 vacuum tubes, ENIAC could perform 5,000 operations per second, a speed unimaginable with mechanical calculators.

(Electronic Numerical Integrator and Computer) was developed in the United States, completing in 1945. Weighing 30 tonnes and containing 17,468 vacuum tubes, ENIAC could perform 5,000 operations per second, a speed unimaginable with mechanical calculators.

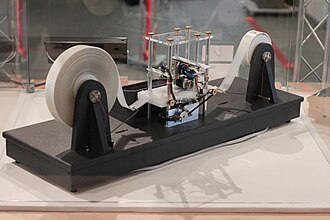

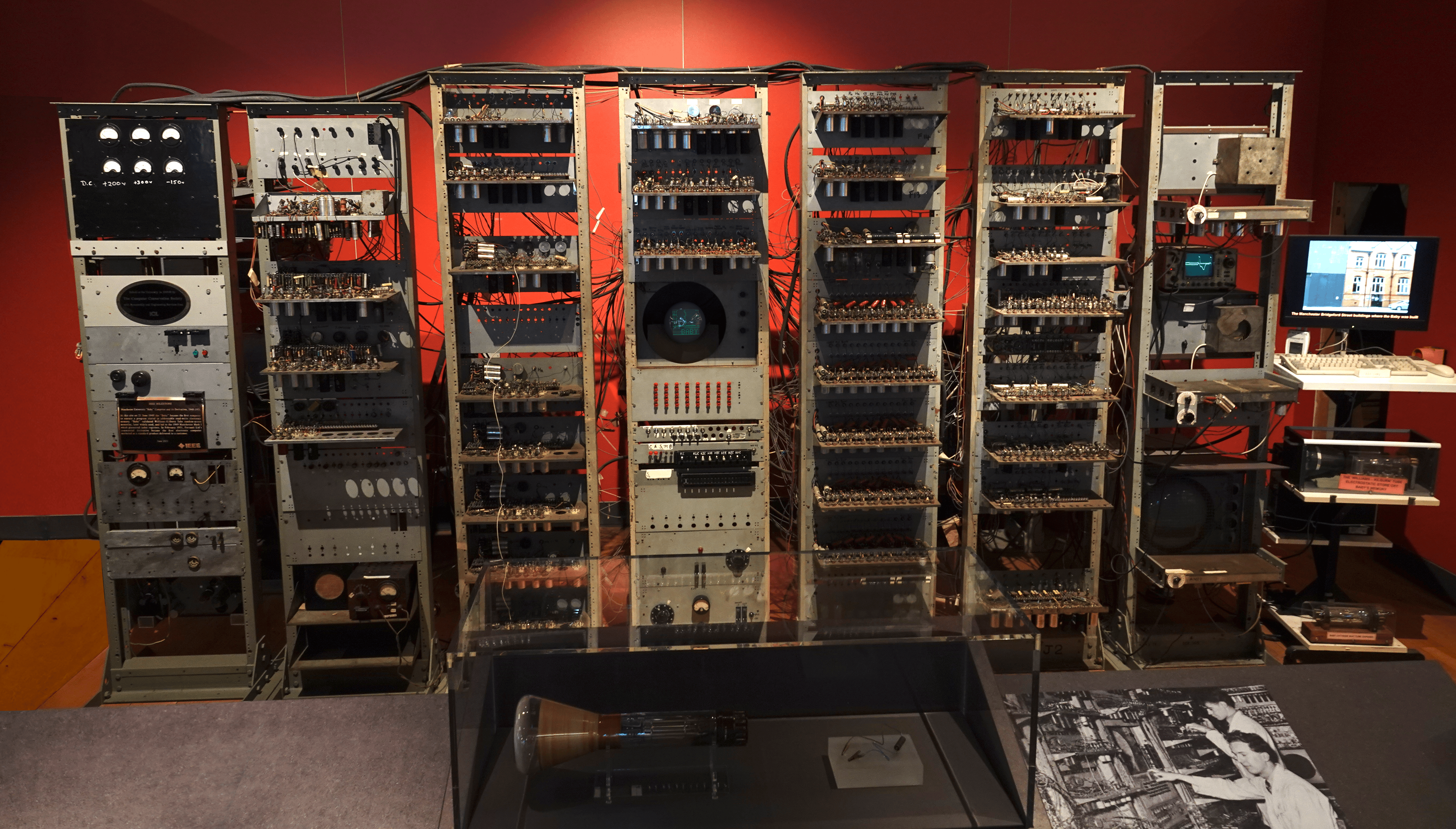

John von Neumann's 1945 paper describing the stored-program architecture became the blueprint for virtually all subsequent computers. Rather than being hardwired, instructions would be stored in memory alongside data, allowing computers to be reprogrammed without physical reconfiguration. The first stored-program computer, the Manchester Baby, ran its first program in 1948.

- John von Neumann: Hungarian-American mathematician and polymath (1903-1957), one of the greatest minds of the 20th century. Made foundational contributions to mathematics, physics, economics, computer science, and game theory. Child prodigy who could divide eight-digit numbers in his head at age six. During WWII, he worked on the Manhattan Project. In computing, he formalised the stored-program concept that became the blueprint for virtually all computers. His architecture (fetch instruction from memory, decode it, execute it, store result) is still used today. Beyond computers, he helped develop game theory, quantum mechanics, and the mathematical framework for nuclear weapons. Died at 53 from cancer, possibly linked to radiation exposure.

- Stored-program architecture: the fundamental design principle of modern computers, where both program instructions and data are stored in the same memory. Before this, computers were programmed by physically rewiring them or using external plug boards. Von Neumann's key insight: treat instructions as data. This means you can write a program once, store it in memory, and run it repeatedly. You can also modify programs on the fly, write programs that modify other programs, and load different software without changing hardware. This architecture has three main components: CPU (executes instructions), memory (stores both programs and data), and input/output devices. The CPU repeatedly fetches an instruction from memory, decodes what it means, executes it, and moves to the next instruction. Nearly every computer you use today, from phones to supercomputers, follows this model.

- Hardwiring: permanently connecting electrical circuits to perform specific functions. In early computers like ENIAC, changing the program meant literally rewiring thousands of cables and switches by hand, connecting different components together. Each new calculation required days or weeks of manual reconfiguration. Imagine having to physically rebuild your computer every time you wanted to run different software. This was practical for machines dedicated to one task (like codebreaking) but impractical for general-purpose computing. Stored-program architecture eliminated this by making the program itself data that could be loaded from memory.

- Physical reconfiguration: manually changing the hardware setup of a machine. For ENIAC, this meant people (often women mathematicians called "computers") spent days repositioning hundreds of cables and setting thousands of switches to program each new task. They had to physically walk around the room-sized machine, tracing circuit diagrams, plugging and unplugging connections. It was slow, error-prone, and physically exhausting work. Stored-program computers eliminated this: you simply loaded a different program into memory, taking seconds instead of days. This made computers practical for multiple applications.

- Manchester Baby: officially called the Small-Scale Experimental Machine, nicknamed "Baby" because of its small memory (32 words of 32 bits each, just 128 bytes total, about enough to store this sentence). Built at the University of Manchester by Frederic Williams, Tom Kilburn, and Geoff Tootill, it ran its first program on 21 June 1948. The name "Baby" was affectionate, reflecting both its experimental nature and limited capability. It wasn't meant to do practical work, just prove that the stored-program concept worked. The program it ran took 52 minutes to find the highest factor of 262,144. Despite its tiny memory and slow speed, it demonstrated that programs could be stored electronically and modified easily. This led to the Manchester Mark 1, a practical computer, and influenced the design of all subsequent computers.

, ran its first program in 1948.
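
The stored-program idea described above can be sketched in a few lines of Python. This is only an illustration, not a model of the Manchester Baby or any real machine: the opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the `run` function, and the memory layout are all invented for the example. What it tries to show is that instructions and data sit in the same memory, and that the processor simply fetches, decodes, and executes whatever it finds there.

```python
# Toy stored-program machine: instructions and data share one memory list.
# The opcodes and layout are hypothetical, chosen only for this sketch.

def run(memory):
    """Fetch, decode, and execute until HALT; return the final memory."""
    acc = 0   # accumulator register
    pc = 0    # program counter: address of the next instruction
    while True:
        op, arg = memory[pc]          # fetch the instruction at address pc
        pc += 1
        if op == "LOAD":              # decode and execute
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "STORE":
            memory[arg] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")

# Addresses 0-3 hold the program; addresses 4-6 hold the data.
program = [
    ("LOAD", 4),    # acc = memory[4]
    ("ADD", 5),     # acc = acc + memory[5]
    ("STORE", 6),   # memory[6] = acc
    ("HALT", 0),
    2, 3, 0,
]

result = run(list(program))
print(result[6])  # 5
```

Reprogramming this toy machine means passing a different list to `run`; nothing about the "hardware" (the function itself) changes, which is the contrast with rewiring that the paragraph draws.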

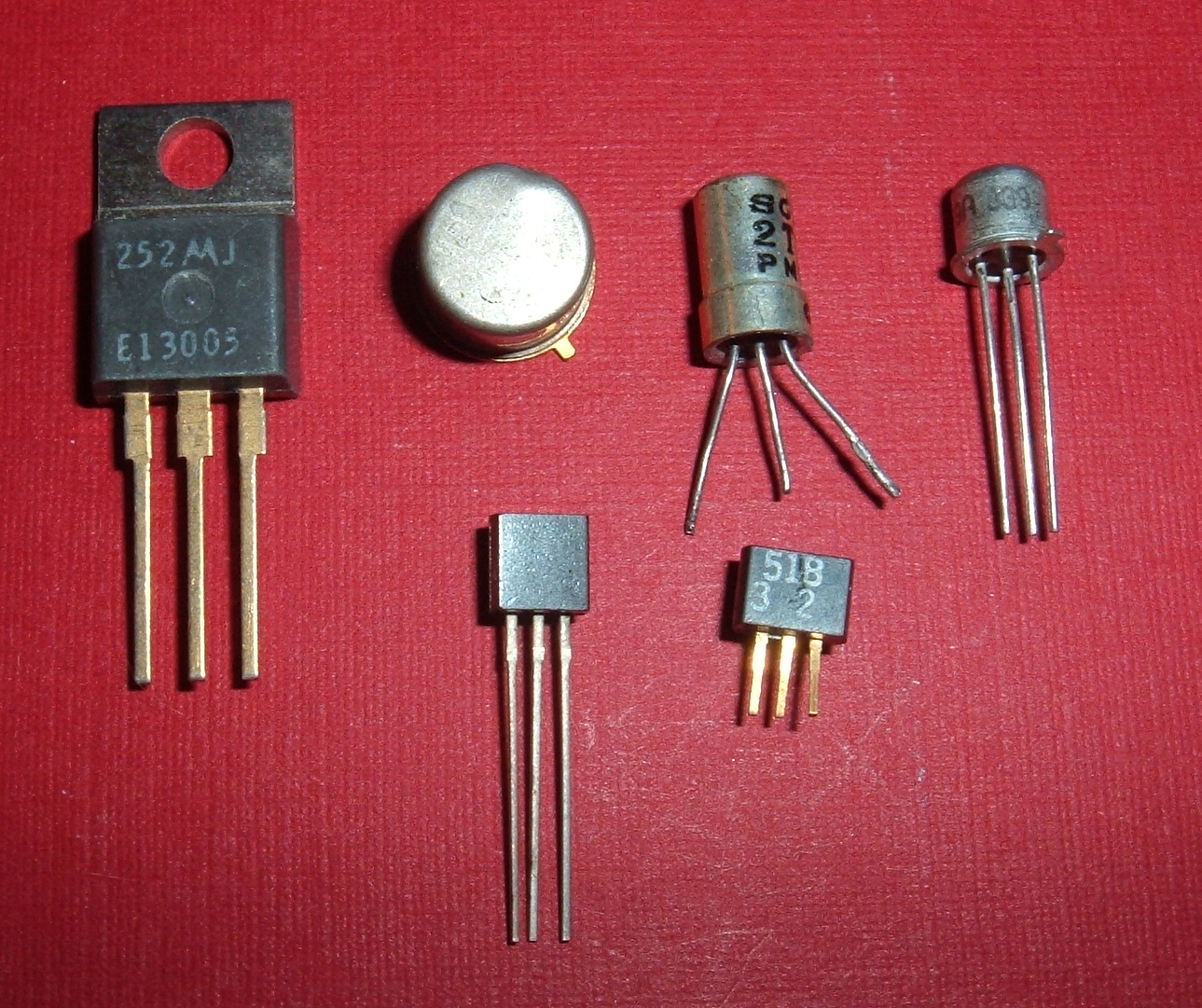

The invention of the transistor [A semiconductor device that can amplify or switch electrical signals, invented in 1947 by John Bardeen, Walter Brattain, and William Shockley at Bell Labs (they won the Nobel Prize in 1956). Made from silicon or germanium (semiconductors, materials that conduct electricity better than insulators but worse than metals). A transistor has three terminals: when you apply voltage to one, it controls current flow between the other two, acting as an electronic switch or amplifier. Transistors revolutionised everything because they're tiny (modern ones are measured in nanometres), fast (switching billions of times per second), reliable (no moving parts or heat-emitting filaments), energy-efficient, and cheap to mass-produce. Your phone contains billions of transistors. They're in computers, radios, TVs, cars, medical devices, basically every modern electronic device. Without transistors, the digital age wouldn't exist.] at Bell Labs [Bell Telephone Laboratories, one of the most influential research institutions in history (1925-1996). Founded by AT&T and Western Electric in New York City and later headquartered in Murray Hill, New Jersey. Employed some of the greatest scientific minds and produced groundbreaking inventions: transistor (1947), laser (1958), solar cell (1954), communications satellite (Telstar, 1962), Unix operating system (1969), C programming language (1972), CCD sensor (used in digital cameras), and foundational work in information theory, radio astronomy, and quantum computing. Nine Nobel Prizes were awarded for work done there. At its peak, Bell Labs represented what scientific research could achieve with long-term funding and freedom to explore. It declined after AT&T's breakup in 1984 and was eventually absorbed into Nokia.] in 1947 revolutionised computing. Transistors were smaller, faster, more reliable, and consumed far less power than vacuum tubes. By the late 1950s, transistor-based computers like the IBM 1401 [A transistor-based computer introduced in 1959 that became the best-selling computer of the early 1960s. Over 12,000 units were sold or leased, more than all other computers combined at the time. Why was it so successful? It was affordable (leasing for $2,500 per month), reliable, easy to program, and perfectly sized for medium businesses. It used magnetic core memory and could process punch cards at high speed. Businesses used it for payroll, inventory, accounting, and customer records. Before the 1401, only large corporations and universities could afford computers. The 1401 brought computing to mainstream business. Its success funded IBM's development of more advanced systems.] began replacing vacuum tube machines. These "second-generation" computers were used for business data processing [Using computers to handle routine business operations: payroll (calculating wages, taxes, deductions for thousands of employees), inventory management (tracking stock levels, reorder points, supplier records), accounting (general ledger, accounts payable and receivable, financial reports), customer records (names, addresses, purchase history), and billing (generating invoices, tracking payments). Before computers, these tasks required rooms full of clerks with paper forms, filing cabinets, and mechanical calculators. A single payroll run might take a week. Computers reduced this to hours, increased accuracy, and freed humans from repetitive calculations. Companies like insurance firms, banks, manufacturing plants, and retailers were early adopters. This created the "data processing" industry and made computing economically essential, not just scientifically interesting.], scientific calculation, and early airline reservation systems [The first computerised airline booking systems, revolutionising how flights were sold. Before computers, airline reservations were handled manually: customers called or visited ticket offices, agents checked paper flight schedules and availability charts, made reservations by phone with central offices, and manually updated records. This was slow (could take hours), error-prone (double-booking was common), and limited in capacity. American Airlines and IBM developed SABRE (Semi-Automated Business Research Environment) in 1960, one of the largest data processing systems ever built. It could handle 83,000 daily transactions, checking seat availability and making reservations in seconds. SABRE connected 1,200 terminals across the US to a central IBM mainframe. This became the template for all modern booking systems (hotels, car rentals, concerts). It proved computers could handle real-time, mission-critical business operations, not just batch processing.].

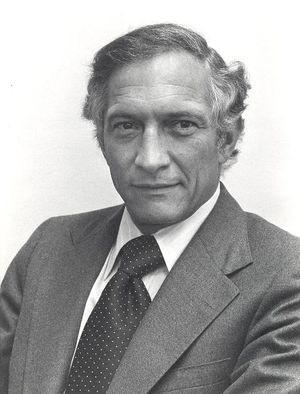

The integrated circuit [A complete electronic circuit containing multiple components (transistors, resistors, capacitors, interconnections) all fabricated together on a single piece of semiconductor material. Before integrated circuits, electronic devices were made by individually soldering discrete components onto circuit boards, a time-consuming, expensive, and space-consuming process. Integrated circuits revolutionised electronics by miniaturising entire circuits. Instead of hand-wiring thousands of components, you could etch them onto a chip the size of a fingernail. This made electronics cheaper, smaller, more reliable (fewer connections to fail), and faster (shorter distances for signals to travel). Modern chips contain billions of transistors. Every electronic device today, from pacemakers to spacecraft, relies on integrated circuits. They enabled the entire digital revolution.], invented independently by Jack Kilby [American electrical engineer (1923-2005) who invented the integrated circuit whilst working at Texas Instruments in 1958. During a company-wide summer holiday, Kilby (a new employee with no vacation time) stayed in the lab and hand-built the first integrated circuit, demonstrating that resistors, capacitors, and transistors could all be made from the same semiconductor material (germanium). His prototype was crude but proved the concept. He won the Nobel Prize in Physics in 2000. His invention, along with Noyce's refinement, launched the modern electronics industry. Without Kilby's insight, computers, smartphones, and the internet wouldn't exist in their current form.] and Robert Noyce [American physicist and entrepreneur (1927-1990), co-founder of Fairchild Semiconductor and Intel. In 1959, Noyce invented a practical method for manufacturing integrated circuits using silicon and a planar process (depositing layers and etching patterns). His technique was more manufacturable than Kilby's, allowing mass production. Noyce was a visionary who understood integrated circuits would transform computing. He co-founded Intel in 1968, which became the world's largest semiconductor company. His leadership style emphasised egalitarianism, open communication, and risk-taking, shaping Silicon Valley's culture. Often called the "Mayor of Silicon Valley", he mentored Steve Jobs and countless others. He died at 62 from a heart attack.] in 1958-1959, allowed multiple transistors to be fabricated [The manufacturing process of creating integrated circuits. "Fabrication" or "fab" involves starting with a pure silicon wafer (a thin circular disc), then using photolithography (shining light through masks to transfer patterns), chemical etching, doping (adding impurities to change electrical properties), and depositing thin films of materials. The process happens in ultra-clean "cleanrooms" (a single speck of dust can ruin a chip). It takes weeks and hundreds of steps to build modern chips. Each wafer contains many individual chips. After fabrication, wafers are tested, cut into individual chips (dies), packaged in protective cases with pins or contacts, and tested again. Modern fabrication happens at the nanometre scale, creating features smaller than viruses. It's one of the most complex manufacturing processes ever invented.] on a single chip of silicon [A small piece of crystalline silicon (a semiconductor element, atomic number 14) onto which an entire electronic circuit is built. Silicon is used because it's abundant (second most common element in Earth's crust after oxygen), has well-suited semiconductor properties (neither fully conducts nor fully insulates electricity), can be purified to extreme levels (99.9999999% pure), and its oxide (silicon dioxide) forms naturally as an excellent insulator for separating circuit layers. A "chip" is typically a few millimetres to a few centimetres square, cut from a circular silicon wafer. Despite being smaller than a postage stamp, modern chips contain billions of transistors and miles of microscopic wiring. The entire history of computing since 1960 has been enabled by our ability to etch ever-smaller features onto silicon.]. This reduced size and cost whilst increasing reliability. By the mid-1960s, "third-generation" computers using integrated circuits, like the IBM System/360 [A revolutionary family of computers announced by IBM in 1964, the most important computer architecture in business history. The "360" name represented 360 degrees, a complete circle of applications from small to large. Revolutionary features: all models in the family were compatible (software written for one could run on another, unprecedented at the time), modular design (customers could start small and upgrade), and standardised peripherals. IBM bet the company on it, spending $5 billion (equivalent to $50 billion today), more than the Manhattan Project. It was a massive success, dominating business computing for decades. System/360 established the concept of computer families, backward compatibility, and standardised architectures that persist today. Its design influenced virtually every computer system since.], could handle multiple tasks simultaneously through time-sharing operating systems [Software that allows multiple users to share a single computer simultaneously. Before time-sharing, computers ran one program at a time in "batch mode": you submitted your program, waited (hours or days), and received results. Time-sharing gives each user a "time slice" (typically milliseconds), switching between users so rapidly that it feels like you have the computer to yourself. Developed in the 1960s at MIT and other universities, it revolutionised computing by making computers interactive rather than batch-only. Users could type commands and get immediate responses, enabling programming, debugging, and exploration in real time. This created the first sense of personal interaction with computers, decades before personal computers existed. UNIX, developed at Bell Labs in 1969, was one of the most influential time-sharing systems. Modern operating systems still use these principles: your computer rapidly switches between apps, giving each a slice of processor time.].
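
The "time slice" idea behind time-sharing can be illustrated with a small round-robin sketch in Python. It is a simplification under stated assumptions: the function name `time_share`, the user names, and the abstract "units of work" are all made up for the example, and real schedulers also deal with priorities, input/output waits, and interrupts, none of which appear here.

```python
from collections import deque

def time_share(jobs, time_slice):
    """Round-robin sketch: `jobs` maps a user name to the units of work
    that user's program still needs. Returns the order in which jobs finish."""
    queue = deque(jobs.items())
    finished = []
    while queue:
        user, remaining = queue.popleft()
        remaining -= time_slice                # run this job for one slice
        if remaining > 0:
            queue.append((user, remaining))    # not done: back of the queue
        else:
            finished.append(user)              # done: record completion
    return finished

print(time_share({"alice": 5, "bob": 2, "carol": 3}, time_slice=2))
# ['bob', 'carol', 'alice']
```

No user waits for another's whole program to finish, as they would in batch mode; each gets a turn every few slices, which is what makes the machine feel interactive.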

The microprocessor and personal computing era (1970s - 1990s):

In 1971, Intel [Integrated Electronics Corporation, founded in 1968 by Gordon Moore and Robert Noyce (who left Fairchild Semiconductor), joined shortly afterwards by Andy Grove. The name combines "integrated" and "electronics". Started in Mountain View, California, with $2.5 million in funding. Their first products were semiconductor memory chips, but their 1971 invention of the microprocessor (Intel 4004) changed everything. Intel became the world's dominant chip maker, supplying processors for most personal computers through partnerships with IBM and Microsoft. Its co-founder formulated Moore's Law (the observation that transistor counts double roughly every two years), and the company drove the PC revolution and remains a leading semiconductor manufacturer. Intel's "Intel Inside" marketing campaign in the 1990s made it one of the world's most recognisable brands despite selling to manufacturers, not consumers.] released the 4004 [The Intel 4004, the world's first commercially available microprocessor (a complete CPU on a single chip). Commissioned by Japanese calculator company Busicom, designed by Intel engineer Federico Faggin. Released in November 1971, it contained 2,300 transistors (compared to billions in modern processors), ran at 740 kHz, and could perform 92,000 instructions per second. Despite being designed for calculators, its general-purpose design meant it could be programmed for any computing task. Cost $200 (equivalent to $1,500 today). It was tiny (3mm × 4mm) but had roughly the same computing power as ENIAC, which filled a room. The 4004 proved computers could be miniaturised onto single chips, launching the microprocessor revolution and eventually enabling personal computers, smartphones, and embedded systems everywhere.], the first commercially available microprocessor [A complete central processing unit (CPU) fabricated on a single integrated circuit chip. Before microprocessors, CPUs required multiple circuit boards or entire cabinets full of components. A microprocessor contains the arithmetic logic unit (performs calculations), control unit (manages instruction execution), and registers (temporary storage), all on one chip. This miniaturisation made computers smaller, cheaper, and more reliable. Microprocessors are programmable (can run any software) and general-purpose (unlike fixed-function chips). They're measured by clock speed (how fast they execute instructions), transistor count (complexity), and architecture (instruction set design). Modern microprocessors contain billions of transistors, multiple cores (independent processors on one chip), cache memory, and specialised units for graphics, encryption, and AI. They power everything from supercomputers to smartwatches.]. Containing 2,300 transistors on a single chip, it had roughly the same computing power as ENIAC whilst being small enough to fit in the palm of your hand. This miniaturisation would prove transformative.

The microprocessor enabled the personal computer revolution. In 1975, the Altair 8800 [The first commercially successful personal computer, sold as a kit by MITS (Micro Instrumentation and Telemetry Systems) in Albuquerque, New Mexico. Named after a star system in Star Trek (the designer's daughter suggested it). It used the Intel 8080 microprocessor, had 256 bytes of memory (expandable), no keyboard or screen (users toggled switches and read LED lights), and cost $439 as a kit ($621 assembled). Despite its primitive interface, it sparked massive interest, selling thousands of units and launching the personal computer industry. Hobbyist clubs formed around it, including the Homebrew Computer Club, which Jobs and Wozniak attended. The Altair proved there was consumer demand for personal computers, not just business mainframes. Its open architecture encouraged third-party hardware and software, establishing the PC ecosystem model.], sold as a kit for hobbyists, became the first commercially successful personal computer. Bill Gates and Paul Allen wrote a BASIC interpreter [Software that executes BASIC (Beginner's All-purpose Symbolic Instruction Code) programming language commands. BASIC was designed in 1964 as an easy-to-learn language for students. An interpreter reads each line of code and executes it immediately (unlike a compiler, which translates entire programs first). Gates and Allen's Altair BASIC (1975) was crucial because the Altair had no software. They wrote it in eight weeks, initially on paper and a simulator (they didn't have an Altair). Their first demonstration used a paper tape loader Allen wrote on the flight to Albuquerque. When it worked on the first try, it was almost miraculous. Altair BASIC let users write programs without toggling binary switches, making the Altair practical. It became Microsoft's first product and established BASIC as the standard programming language for early personal computers.] for it, founding Microsoft [Microsoft Corporation, founded on 4 April 1975 by Bill Gates (19 years old, dropped out of Harvard) and Paul Allen (22, working at Honeywell) in Albuquerque, New Mexico (where MITS, maker of the Altair, was based). The name combined "microcomputer" and "software". Their vision: "a computer on every desk and in every home" (radical in 1975, when computers filled rooms). They started by licensing software to hardware makers rather than selling to consumers. The company moved to Bellevue, Washington (near Seattle) in 1979. Their big break came in 1980 when IBM chose Microsoft to provide an operating system for the IBM PC. Gates bought QDOS (Quick and Dirty Operating System) for $50,000, adapted it, and licensed it to IBM as MS-DOS whilst retaining rights to license it to other PC makers. This decision made Microsoft the dominant OS provider as the PC industry exploded. Went public in 1986. By the 1990s, Microsoft dominated with Windows and Office, becoming one of the world's most valuable companies.] in the process. In 1976, Steve Jobs [Steven Paul Jobs (1955-2011), co-founder of Apple, Pixar, and NeXT. Adopted at birth, grew up in California's Santa Clara Valley (later Silicon Valley). Dropped out of Reed College after one semester but audited calligraphy classes, which influenced Apple's focus on typography. Worked at Atari, travelled to India seeking enlightenment, and co-founded Apple in his parents' garage in 1976 at age 21. Known for perfectionism, design obsession, and a "reality distortion field" (convincing people impossible things were achievable). Forced out of Apple in 1985, founded NeXT (whose OS became macOS) and bought Pixar (later sold to Disney for $7.4 billion). Returned to Apple in 1997 when it was near bankruptcy and led one of business history's greatest turnarounds. Launched iMac (1998), iPod (2001), iPhone (2007), and iPad (2010), transforming multiple industries. Died at 56 from pancreatic cancer. His keynote presentations and product launches became cultural events. He believed in the intersection of technology and the liberal arts.] and Steve Wozniak [Stephen Gary Wozniak (born 1950), co-founder of Apple and the engineering genius behind the Apple I and Apple II. Nicknamed "Woz". Grew up in California, son of an engineer, obsessed with electronics from childhood. Met Jobs through a mutual friend in 1971. Whilst Jobs provided vision and business sense, Wozniak was the technical wizard who actually built the computers. Designed the Apple I (1976) and Apple II (1977) almost entirely himself, creating elegant, efficient designs that used fewer chips than competitors. The Apple II's colour graphics, sound, and expandability made it the first truly successful personal computer, selling millions. Known for his playful personality, generosity (he gave stock to early Apple employees who weren't fairly compensated), and lack of interest in business politics. Left Apple's day-to-day operations in 1985 but remains an employee and brand ambassador. Funded educational technology initiatives. One of history's greatest hardware engineers.] introduced the Apple I [The first Apple computer, hand-built by Wozniak in 1976. Unlike the Altair, it came as an assembled circuit board (not a kit), though users needed to add their own case, keyboard, and display. It used the MOS Technology 6502 processor, had 4KB of RAM (expandable to 65KB), and included a video interface that could display on a standard TV. Revolutionary feature: you could type on a keyboard and see the output on a screen, not toggle switches and read lights. Cost $666.66 (Wozniak liked repeating digits). Only 200 were made, mostly sold through the Byte Shop in Mountain View. Today, surviving units sell for hundreds of thousands of dollars. The Apple I proved Wozniak's design philosophy: elegant, minimal, user-friendly. It was technically impressive but commercially limited (needed assembly, no case). It paved the way for the far more successful Apple II.], followed by the more user-friendly Apple II [Released in 1977, the first highly successful personal computer for both home and business use. Wozniak's masterpiece of engineering efficiency and user-friendliness. Key innovations: came fully assembled in a plastic case (not a kit), colour graphics (revolutionary for the time), sound capabilities, eight expansion slots (users could add memory, disk drives, printers), and compatibility with the 5.25-inch floppy disk drive (1978), enabling easy software distribution. It ran at 1 MHz with 4KB RAM (expandable to 48KB). Cost $1,298. The Apple II was approachable: non-technical users could set it up and use it. VisiCalc (1979), the first spreadsheet program, ran on the Apple II, making it essential for businesses. The Apple II line dominated education and home computing through the 1980s, selling nearly 6 million units over 16 years. Its success funded Apple's later projects. The GUI and mouse-driven design philosophy tested on the Apple II influenced the Macintosh.] in 1977.
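
The difference between an interpreter and a compiler mentioned above can be made concrete with a toy line-by-line interpreter. This sketch is not Altair BASIC and does not follow real BASIC syntax rules; the two-statement mini-language (`LET` and `PRINT`) and the `interpret` function are invented purely to show the "read a line, execute it immediately" behaviour.

```python
def interpret(lines):
    """Execute each line as soon as it is read; return what was printed.
    The mini-language here is hypothetical, not real BASIC."""
    variables = {}
    output = []
    for line in lines:
        keyword, _, rest = line.strip().partition(" ")
        if keyword == "LET":                        # e.g. LET X = 7
            name, _, value = rest.partition("=")
            variables[name.strip()] = int(value)
        elif keyword == "PRINT":                    # e.g. PRINT X
            output.append(variables[rest.strip()])
        else:
            raise SyntaxError(f"cannot interpret: {line!r}")
    return output

print(interpret(["LET X = 7", "LET Y = 35", "PRINT Y", "PRINT X"]))
# [35, 7]
```

Because each line runs as soon as it is read, a mistake on a later line is only discovered after the earlier lines have already executed. A compiler, by contrast, would translate (and check) the whole program before running any of it.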

The IBM PCIBM Personal Computer, released in August 1981. IBM's entry into personal computing legitimised the PC industry (if IBM, the dominant business computer company, was making them, PCs were serious business tools, not just hobbyist toys). Built in just one year by a small team in Boca Raton, Florida. Used Intel 8088 processor, 16KB RAM (expandable to 640KB), and cost $1,565. Revolutionary decision: IBM used off-the-shelf components and published technical specifications, creating an open architecture anyone could copy. This spawned "IBM PC compatibles" (clones) that accelerated industry growth but eventually eroded IBM's market share. Ran MS-DOS (Microsoft Disk Operating System). The PC's business focus, IBM's brand reputation, and open design made it the industry standard. Within a few years, "PC" meant "IBM PC or compatible", marginalising other designs like Commodore and Atari. IBM's PC architecture, with its expansion slots and standard components, became the template for modern desktop computers., released in 1981 with an operating systemSoftware that manages computer hardware and provides services for application programs. The OS acts as intermediary between users/applications and hardware. Core functions: process management (running multiple programs), memory management (allocating RAM), file system (organising data storage), device drivers (communicating with hardware like printers and displays), and user interface (command line or graphical). Without an OS, every program would need to directly control hardware (impossible complexity). The OS abstracts this: programs ask the OS to save a file, the OS handles the physical disk operations. Examples: MS-DOS (command-line, 1981), Windows (graphical, 1985), macOS (Unix-based, 2001), Linux (open-source, 1991), iOS (mobile, 2007), Android (mobile, 2008). Modern OSs handle security, networking, multitasking, and provide APIs for developers. The OS is the foundation of the computing experience. 
licensed from Microsoft (MS-DOSMicrosoft Disk Operating System, released in 1981 for the IBM PC. A command-line OS where users typed text commands (like "dir" to list files, "copy" to copy files). Microsoft bought QDOS (Quick and Dirty Operating System) from Seattle Computer Products for $50,000, modified it, and licensed it to IBM. Crucially, Microsoft retained the right to license it to other PC makers, whilst IBM could only bundle it with IBM PCs. As PC clones proliferated, MS-DOS became ubiquitous, making Microsoft the dominant OS provider. MS-DOS was simple (single-tasking, no graphical interface, limited memory management) but adequate for early PCs. Its command structure influenced Windows (which initially ran on top of DOS) and established Microsoft's OS dominance. Lasted until Windows 95/98 fully integrated graphical interfaces. MS-DOS made Microsoft billions and established the PC software ecosystem.), established the standard architectureA consistent set of hardware and software specifications that different manufacturers can follow, ensuring compatibility. The IBM PC's architecture defined: processor type (Intel x86 family), expansion bus (allowing add-on cards for graphics, sound, networking), memory layout (640KB conventional memory, expanded/extended memory), BIOS (basic input/output system for hardware initialisation), and peripheral interfaces (keyboard, display, disk drives). Because IBM published specifications, other companies could build "compatible" machines that ran the same software. This created a positive feedback loop: more compatible hardware meant more software developed, which attracted more hardware makers. Standards enable economies of scale, competition, and rapid innovation. The PC standard architecture persists today: modern Windows PCs are evolutionary descendants of the 1981 IBM PC. Contrast with Apple's closed architecture, where Apple controls hardware and software, limiting compatibility but enabling tighter integration. 
that would dominate personal computing for decades. The introduction of the graphical user interfaceA visual way of interacting with computers using windows, icons, menus, and a pointer (WIMP), instead of typing text commands. GUI represents files as icons you can drag and drop, programs as windows you can resize and move, and actions as buttons you can click. Contrast with command-line interfaces where you type "copy file1.txt file2.txt". GUIs make computers intuitive: you don't need to memorise commands or syntax. The concept originated at Stanford Research Institute, where Douglas Engelbart's 1968 demo introduced the mouse, and at Xerox PARC (Xerox Alto, 1973), which developed overlapping windows and icons. Apple commercialised it with the Macintosh (1984). Microsoft followed with Windows. GUIs enabled mass adoption of computers by non-technical users. Today, nearly all consumer computing uses GUIs (desktops, smartphones, tablets). The visual metaphor of a "desktop" with "files" and "folders" has shaped how billions of people understand computing., pioneered by Xerox PARCPalo Alto Research Center, Xerox Corporation's research lab founded in 1970 in California. One of the most influential research labs in computing history, despite Xerox failing to commercialise most of its inventions. PARC researchers invented or developed: the graphical user interface (Xerox Alto computer, 1973), overlapping windows, icons, Ethernet (local area networking), laser printing, object-oriented programming (Smalltalk language), and WYSIWYG (what you see is what you get) text editing. They also refined the mouse, which Douglas Engelbart had invented at SRI. In 1979, Steve Jobs visited PARC and saw the GUI demo. He later said it was like "seeing the future". Apple adapted PARC's ideas for the Lisa and Macintosh. Microsoft later did the same for Windows. PARC's researchers were visionary but Xerox, focused on photocopiers, didn't recognise the commercial potential. PARC became the cautionary tale of brilliant research squandered by corporate short-sightedness. 
Many PARC innovations became industry standards decades later. and popularised by Apple's MacintoshApple's revolutionary computer, released in January 1984 with the famous "1984" Super Bowl ad directed by Ridley Scott. "Macintosh" is a variety of apple (spelled "McIntosh"), chosen by Apple employee Jef Raskin. The Mac was the first affordable computer with a GUI and mouse for mainstream consumers. Featured a 9-inch black-and-white screen, ran at 8 MHz with 128KB RAM, and cost $2,495. Introduced fonts, graphics, and point-and-click interaction to the masses. Steve Jobs famously obsessed over every detail, from screen fonts (inspired by his calligraphy class) to the beige colour. Initially struggled due to limited software and insufficient memory, but the Mac's design philosophy (simplicity, elegance, user-friendliness) became Apple's identity. Desktop publishing (with PageMaker and LaserWriter) became the Mac's killer application. The Mac line continues today as macOS, maintaining design principles from 1984: intuitive interface, aesthetic focus, and seamless hardware-software integration. "Apple" itself comes from Jobs's time on an apple farm commune and his belief it sounded friendly, non-threatening, and appeared before Atari in the phone book. in 1984 and later Microsoft Windows, made computers accessible to non-technical users.

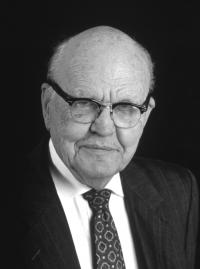

Moore's LawAn observation made by Gordon Moore in a 1965 paper predicting that the number of transistors on an integrated circuit would double approximately every two years (he initially said annually, later revised to every two years). This wasn't a law of physics but an observation about the pace of technological and manufacturing progress in semiconductors. How was it discovered? Moore, then at Fairchild Semiconductor, plotted the complexity (transistor count) of the most advanced chips from 1959 to 1965 on a graph and noticed exponential growth. He extrapolated this trend forward. Remarkably, the semiconductor industry used Moore's observation as a target, investing in research and manufacturing to maintain the pace. Moore's Law held for over 50 years, enabling exponential increases in computing power whilst costs per transistor fell. By the 2010s, it began slowing as transistors approached atomic scales and physical limits. Moore's Law drove the entire digital revolution, making computers faster and cheaper every year, enabling everything from smartphones to AI., observed by Intel co-founder Gordon MooreGordon Earle Moore (1929-2023), American chemist, businessman, and co-founder of Intel. PhD in chemistry and physics from Caltech. Joined Shockley Semiconductor (founded by transistor inventor William Shockley), then left with the "Traitorous Eight" to found Fairchild Semiconductor in 1957. In 1968, co-founded Intel with Robert Noyce. Whilst Noyce was the visionary leader, Moore was the technical strategist. His 1965 paper predicting exponential growth in chip complexity became "Moore's Law", the defining principle of the semiconductor industry. As Intel CEO (1979-1987) and chairman (1987-1997), he guided Intel's dominance in microprocessors. Donated over $1 billion to scientific research and environmental causes. Died in 2023 at age 94. His observation shaped the trajectory of the entire information age. 
in 1965 and revised in 1975, predicted that the number of transistors on a chip would double approximately every two years, leading to exponential increases in computing power. This held remarkably true for decades. By 1993, Intel's Pentium processorIntel's landmark processor released in 1993, marking a major advancement in PC performance. Named "Pentium" (from Greek "penta" meaning five, as it was Intel's fifth-generation x86 architecture) rather than "586" because Intel couldn't trademark numbers. Contained 3.1 million transistors, ran at 60-200 MHz (compared to the 4004's 740 kHz), and could perform around 100 million instructions per second. Featured superscalar architecture (could execute multiple instructions simultaneously), separate caches for data and instructions, and improved floating-point math. The Pentium became synonymous with PC performance in the 1990s. Intel's massive marketing campaign ("Intel Inside" stickers on PCs) made Pentium a household name, unusual for a computer component. The name strategy continued (Pentium II, III, and 4). A famous early bug in the floating-point divider (discovered in 1994) led to a costly recall, teaching Intel about quality control. Pentium processors dominated the PC market through the late 1990s. contained 3.1 million transistors. Today's processors contain tens of billions.
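
To get a feel for what "doubling every two years" means in practice, here is a minimal sketch in Python. It treats Moore's Law as a simple formula, count = start × 2^(years elapsed / 2), and projects forward from the Pentium figure quoted above. The function name `projected_transistors` is made up for this illustration, and the Intel 4004 figure (roughly 2,300 transistors in 1971) is a widely cited number that does not come from this article. Real chips never followed the curve exactly, so treat the output as a rough guide only.

```python
def projected_transistors(start_count, start_year, target_year, doubling_period_years=2.0):
    """Project a transistor count forward, assuming one doubling per period."""
    doublings = (target_year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


# Figure from the text: the Pentium had 3.1 million transistors in 1993.
PENTIUM_COUNT = 3_100_000
PENTIUM_YEAR = 1993

for year in (1993, 2003, 2013, 2023):
    estimate = projected_transistors(PENTIUM_COUNT, PENTIUM_YEAR, year)
    print(f"{year}: about {estimate:,.0f} transistors")

# Sanity check backwards in time. Assumption: the Intel 4004 (1971) had
# roughly 2,300 transistors, a figure taken from outside this article.
estimate_1993 = projected_transistors(2_300, 1971, 1993)
print(f"4004 projected to 1993: about {estimate_1993:,.0f} transistors")
```

Thirty years is fifteen doublings, so the 2023 projection comes out at roughly 100 billion transistors, the same order of magnitude as the "tens of billions" found in today's largest chips. Running the same formula from the 4004 gives about 4.7 million for 1993, reasonably close to the Pentium's actual 3.1 million.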